
Abacusynth (Hardware)

Synthesizer, 2022

Traversal

Generative Audio/Visual, 2023

Rec Lobe TV

Multimedia Installation, 2022

Doors we Open

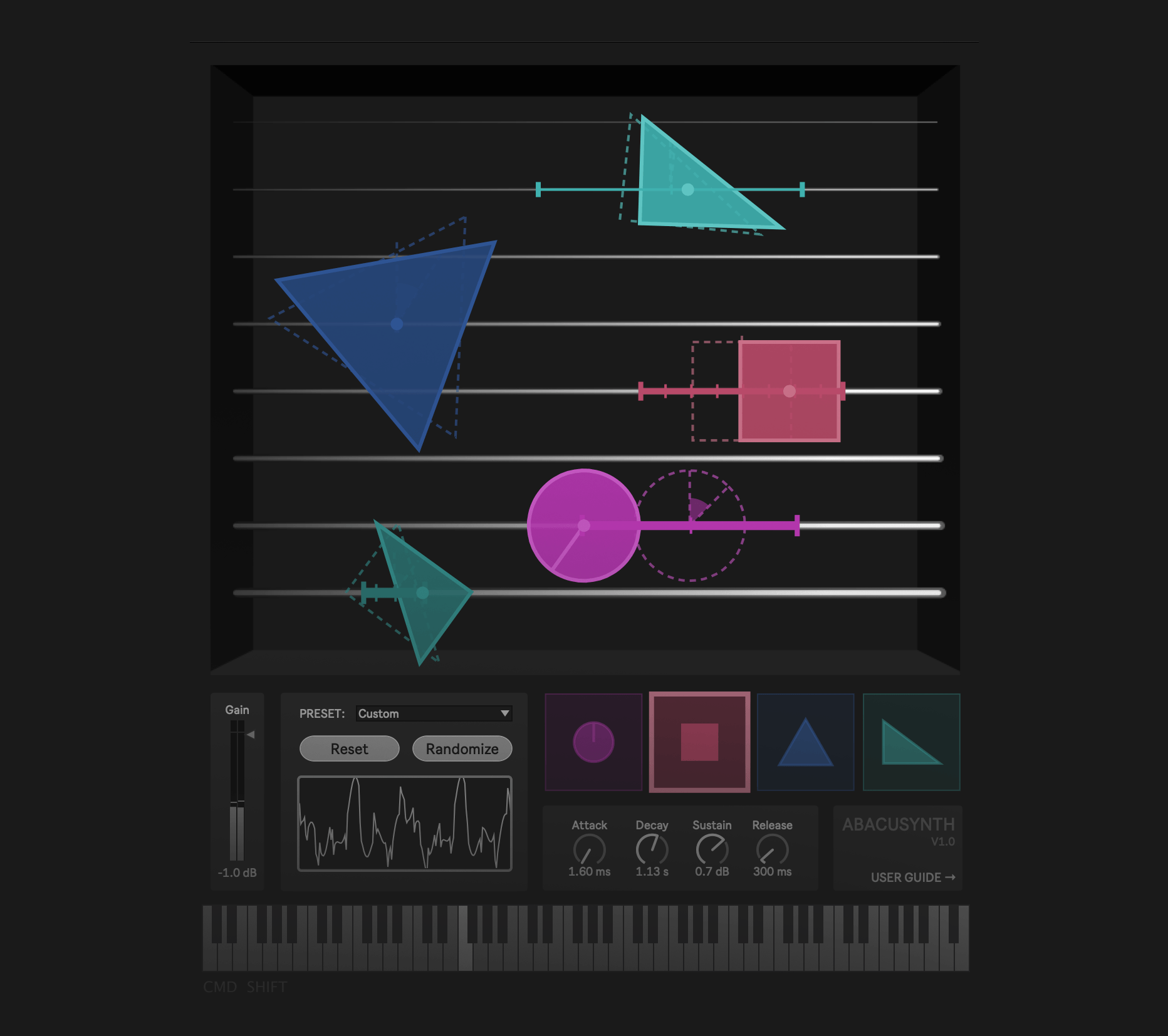

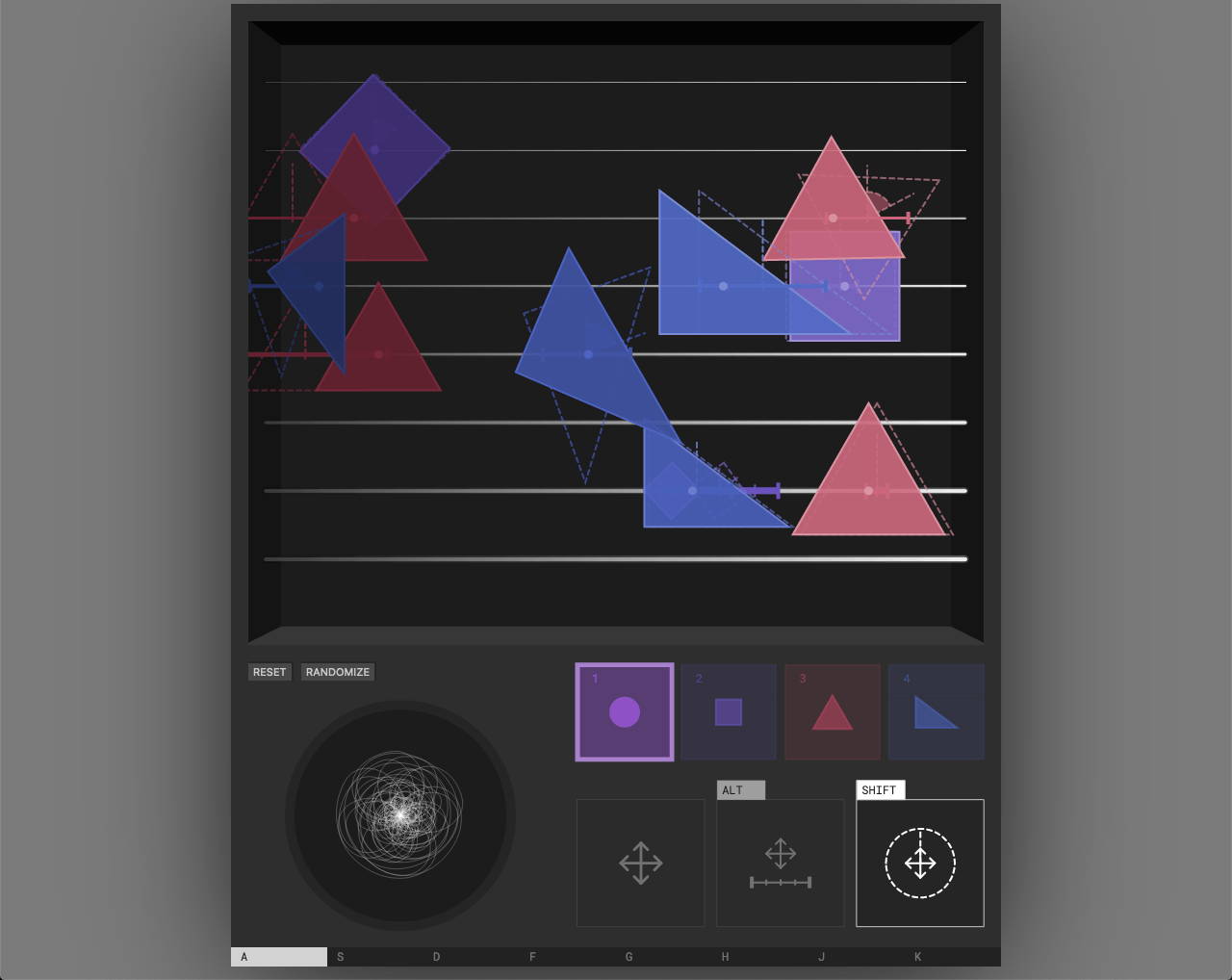

Soundtrack, 2022

Abacusynth (Plugin)

Max for Live Plugin, 2022

Pendular

Collaborative Performance/Musical Interface, 2021

Puncture

Collaborative Performance/Musical Interface, 2021

Abacusynth (Web)

Web/Audio, 2021

Tirtha: An Architectural Opera

Album/Multimedia, 2020

Musical Garden

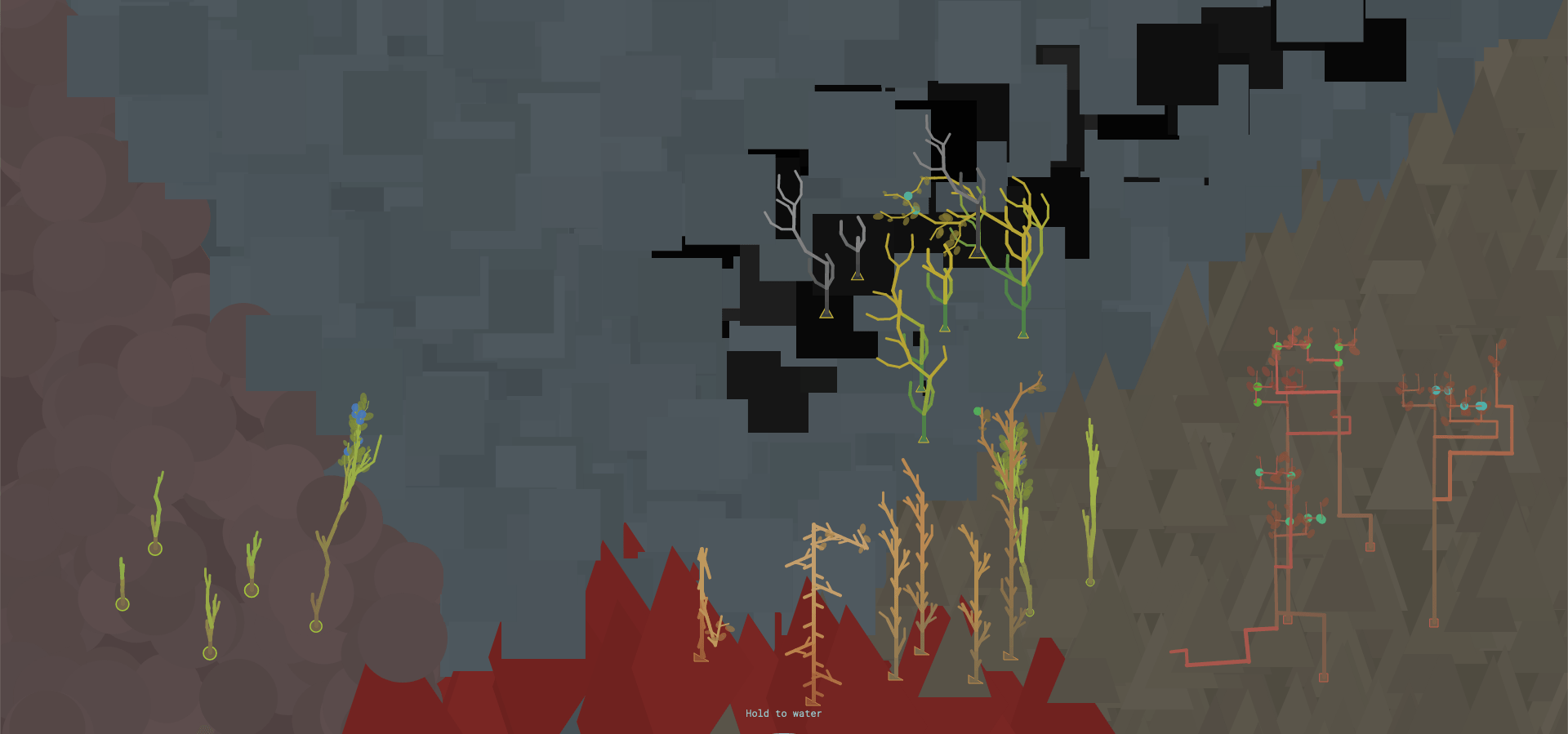

Web/Audio, 2020

Shape Your Music

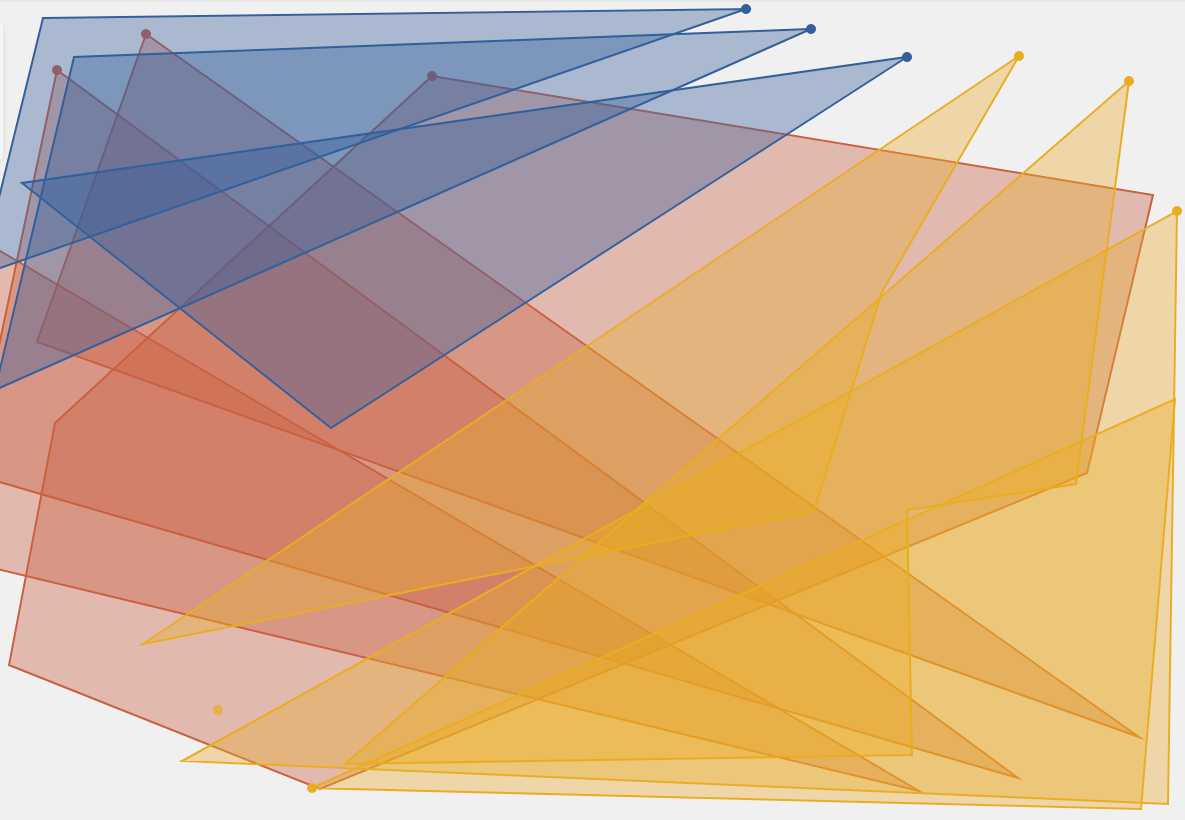

Web/Audio, 2016

◣

Animation Experiment, 2020

Texturizer

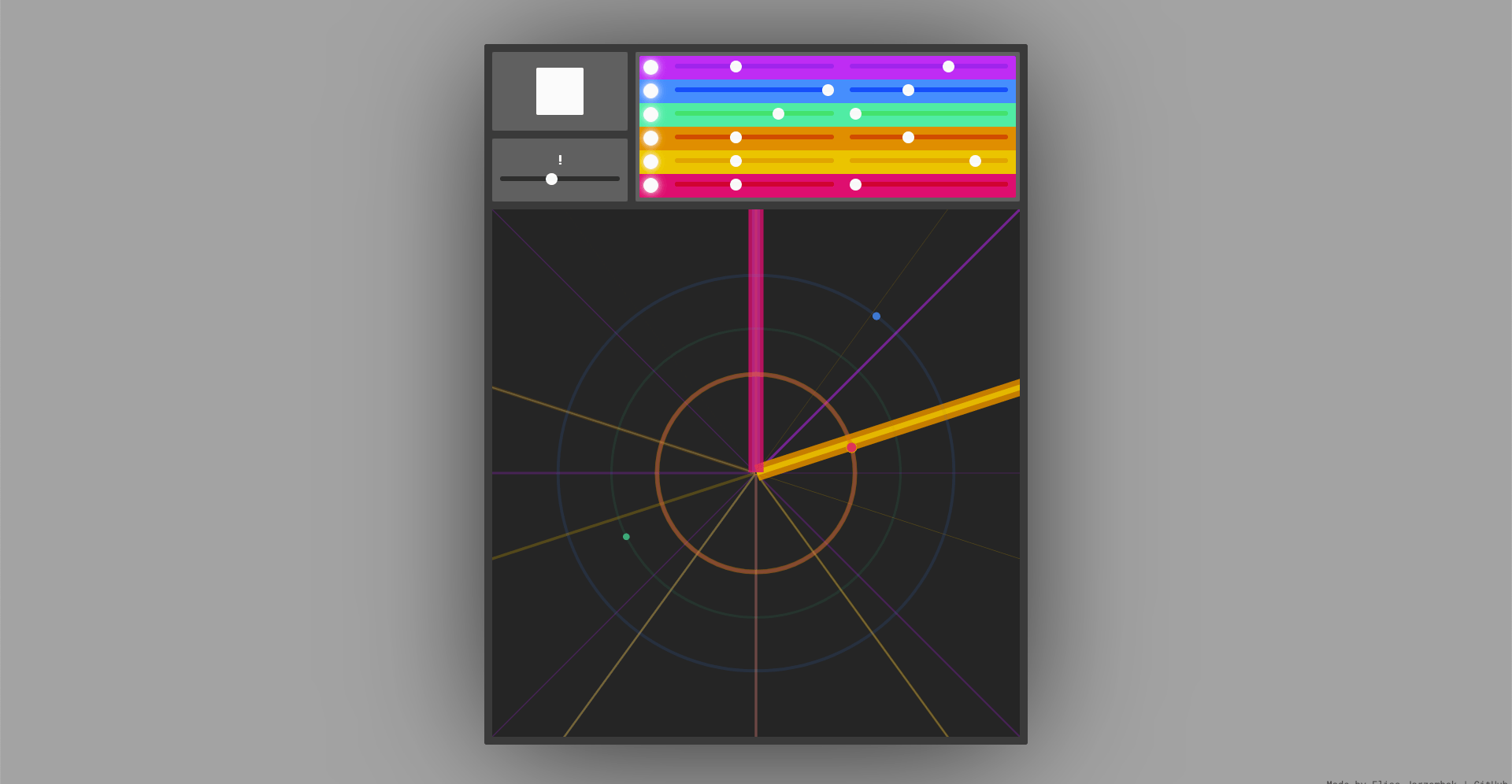

Web/Generative, 2020

Drum Radar

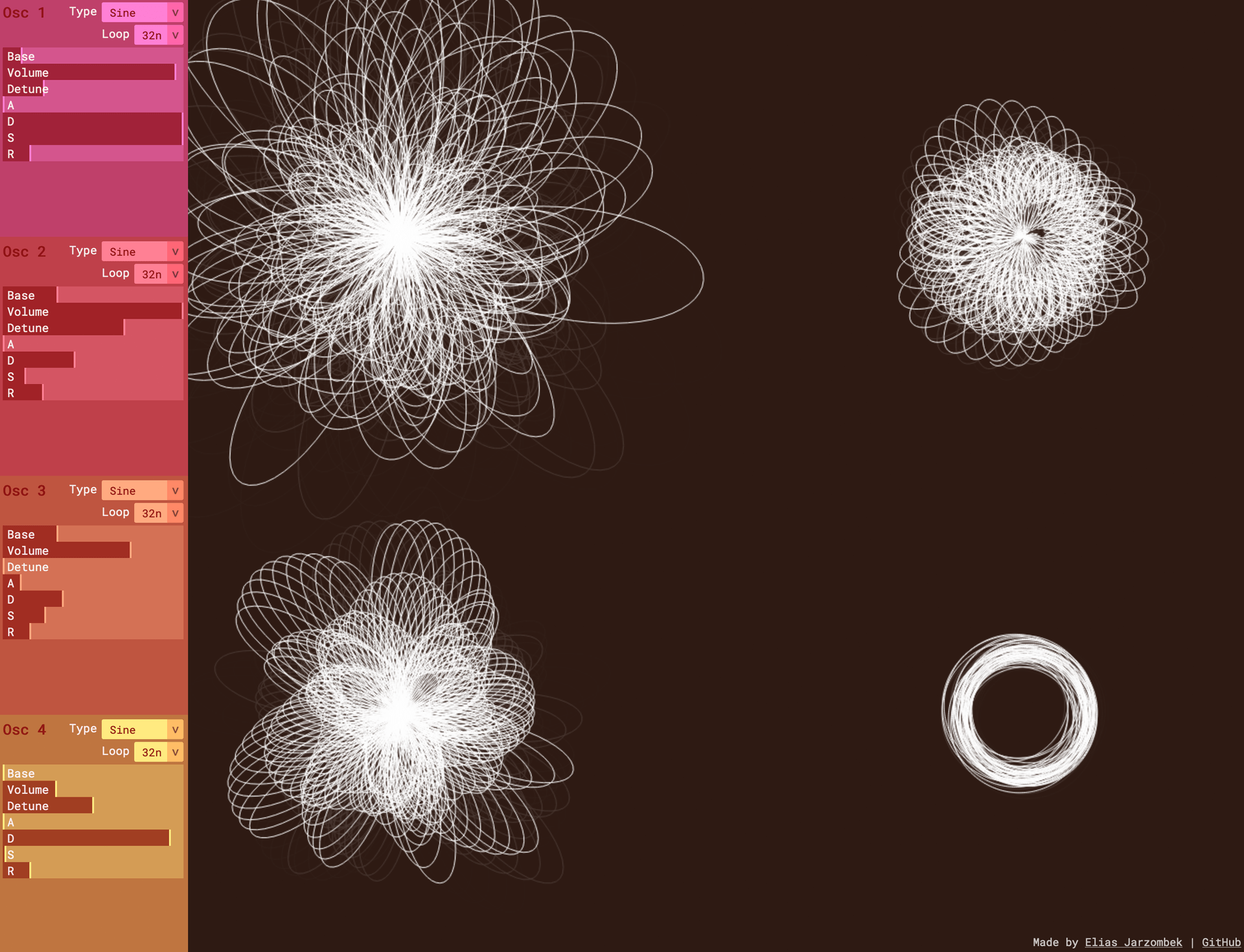

Web/Audio, 2020

Web Synthesizer

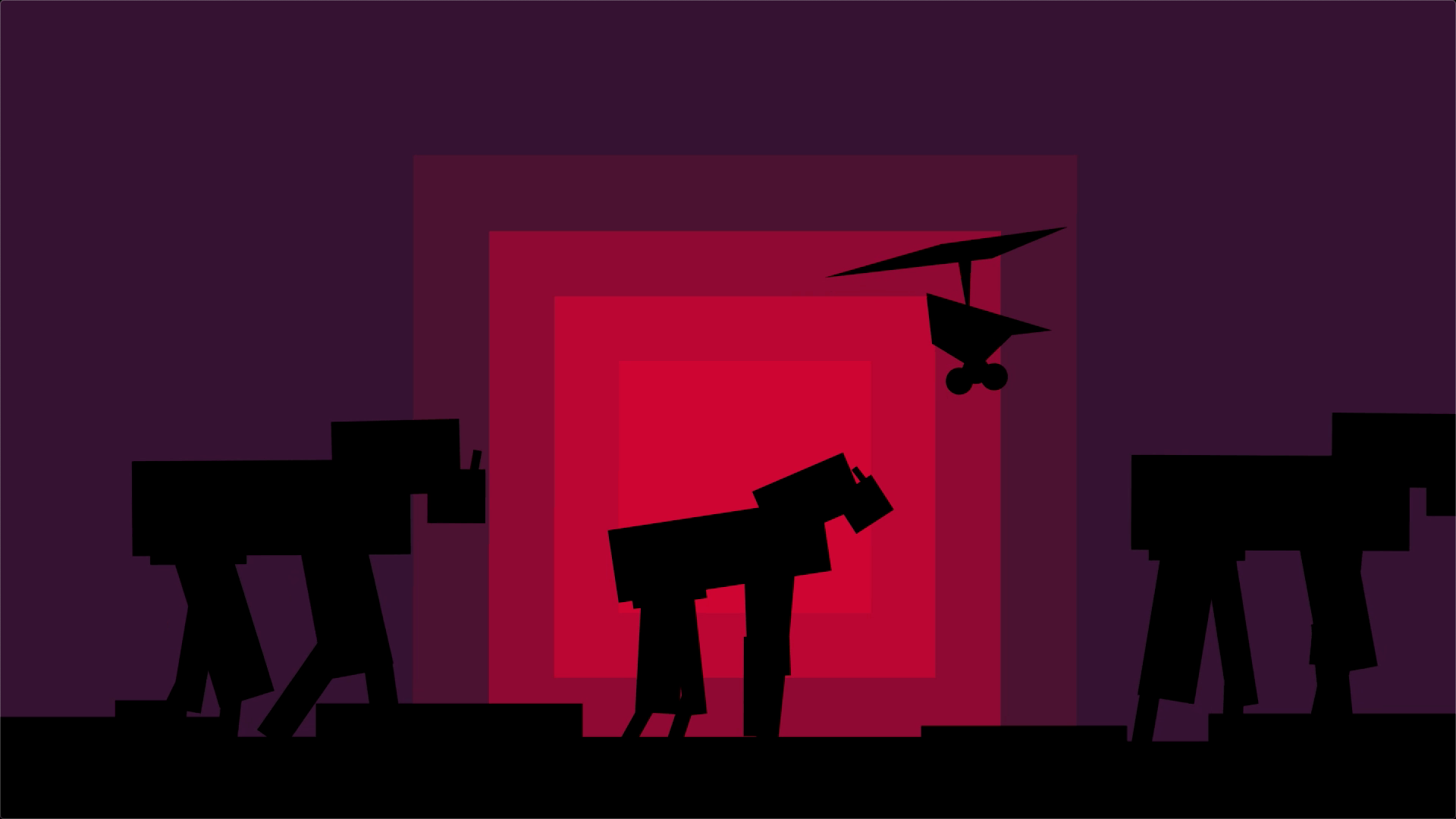

Web/Audio, 2020

Vec Tor Bel

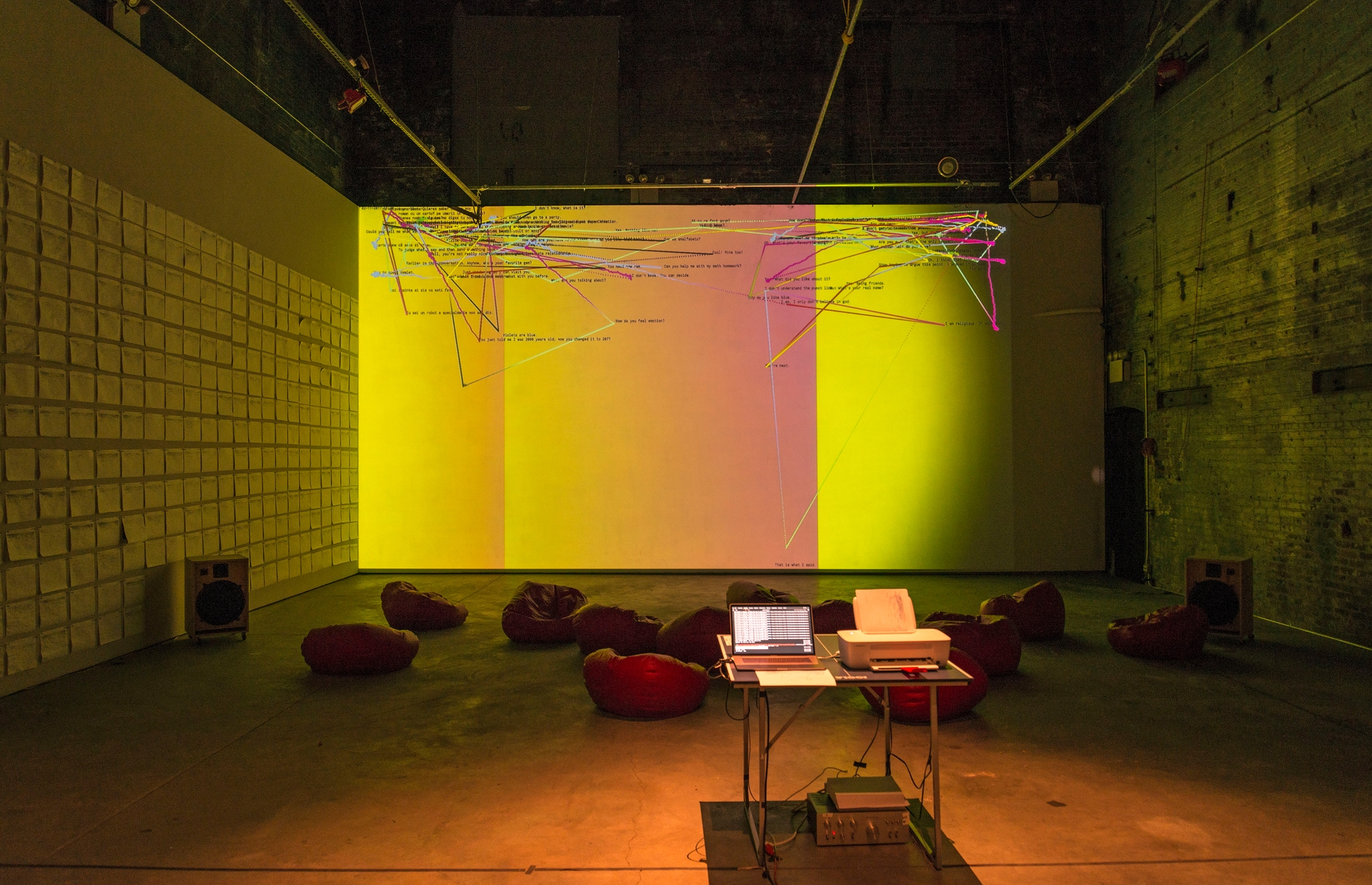

Installation, 2018

Verbolect

Installation, 2017